Big Data is big news. Almost every device is now capable of generating data that might be uploaded and stored centrally, so the amount of data available is growing exponentially. But data is not information, and the sheer mass of data can bury the subset that is actually meaningful. According to IBM, less than 1% of the data we generate each day is mined for valuable insights, and anybody involved in data mining knows how difficult it is to extract quality information even from the portion that is analysed. The Internet of Things (IoT), in which every device contributes data, will only exacerbate this problem.

The cost of not managing data can also be profound. One widely cited infographic reckons that poor data quality across business and government costs the US economy $3.1 trillion a year. In the UK, poor data quality is expected to cost the big four supermarkets alone some $1 billion over the next five years.

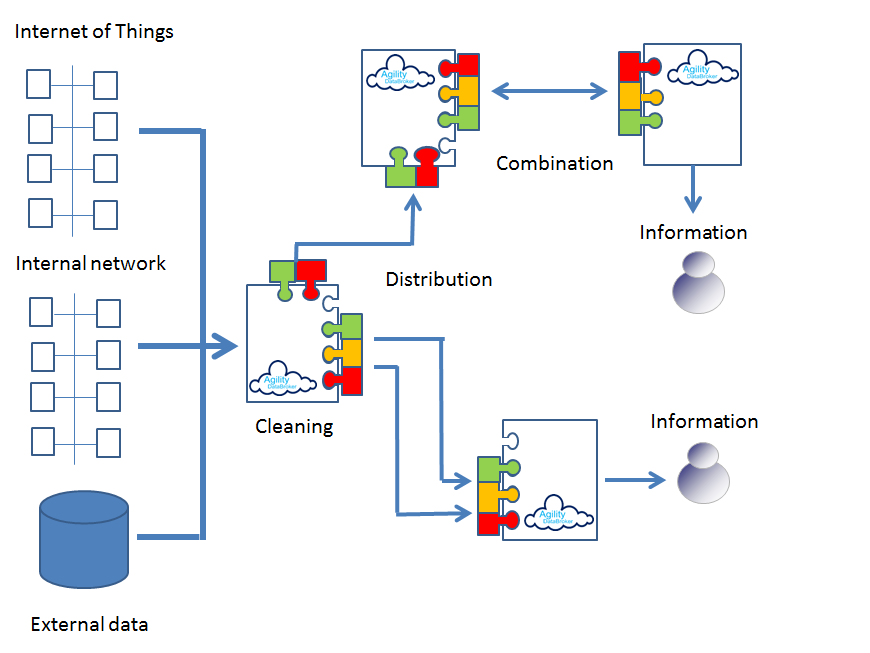

Agility DataBroker is a framework that allows an organization to unlock the value of its data through controlled consolidation, analysis and distribution.

Agility DataBroker can be used to clean and filter data close to its source and to consolidate it into a form from which the relevant analyst can efficiently extract the information they need.