Agility DataBroker is a framework that allows an organisation to unlock the value of its data through controlled consolidation, analysis and distribution.

"Every two days we create as much information as we did from the dawn of civilization up until 2003." – Eric Schmidt, Google

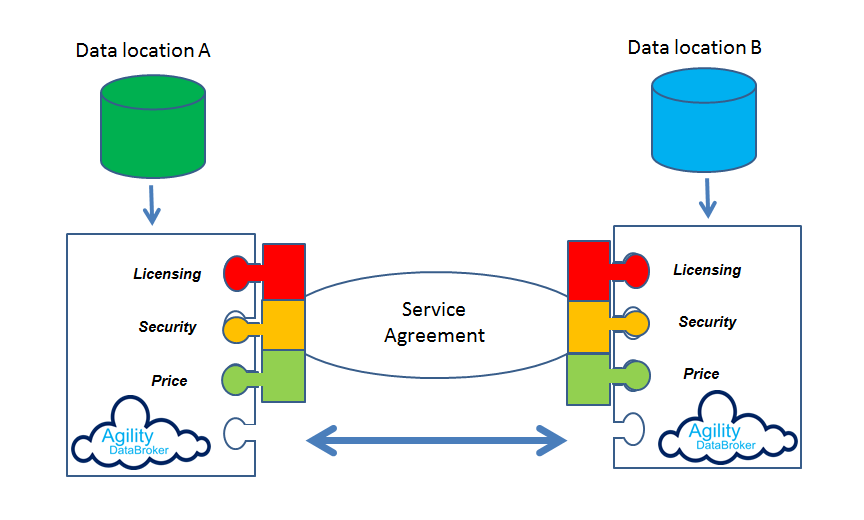

As connected people and their electronic devices generate ever larger volumes of data, turning that data into meaningful information requires the right enabling technology. Agility DataBroker is a policy-led framework that consolidates data from multiple sources, in different formats, both inside and outside the cloud. It ensures that the data is analysed and distributed in accordance with service agreements that are strictly enforced but can be changed dynamically.

It can be deployed to connect as few as two information silos, or used across a large network.

Agility DataBroker facilitates access to data in the cloud in accordance with the service agreements imposed by the data owners. Organisations that own data often need to supplement it with additional data from external sources to make it usable and productive. Those external owners can share their data under easily changeable, granular policies that implement the service agreements they consider necessary.

Agility DataBroker helps organisations that generate large amounts of data to turn that data into meaningful information. In a world where 90% of electronic data has been generated over the last two years (ScienceDaily), the technology available for processing that data has not kept pace. Agility DataBroker can be used to clean data, extract relevant and meaningful datasets, distribute that data to multiple authorised recipients, and combine it with other external data sources to provide usable information.

Agility DataBroker provides a mechanism for data owners to publish details of the data they hold to an “advertisement” portal. Researchers can use this portal to consolidate and analyse that data alongside data from other sources to produce meaningful results. In this way, duplicate data can be identified, meaningful data extracted and communication improved.