While it is true that current auditing standards have their roots firmly in the traditional audit process, where samples of transactions are examined rather than an entire body of data, auditors cannot ignore the proliferation of data, especially big data. Indeed, Ernst & Young believe that the end goal is to have intelligent audit applications that function within companies’ data centers and stream the results of proprietary analytics to audit teams.

There are, however, significant obstacles to overcome in reaching that goal. Ernst & Young identified data capture as one barrier to successful integration. “Companies invest significantly in protecting their data, which makes the process of obtaining client approval for the provision of data to auditors time-consuming,” the article says. “In some cases, companies have refused to provide data, citing security concerns.” Auditors also encounter hundreds of different accounting systems, often with multiple systems within the same company, all containing different sets and types of data. Embracing big data will further increase both the complexity of data extraction and the volumes of data to be processed.

As the Association of Chartered Accountants said in its members’ magazine, “The quantity of data produced by and available to companies, the replacement of paper trails with IT records, cloud storage, integrated reporting, and growing stakeholder expectations for immediate information: any one of these alone would affect the auditing process, but big data is bringing them all, and more, at the same time.”

We believe that Agility DataBroker, a software framework from Arjuna, can provide a mechanism to solve current data-related problems, including the proliferation of data in the cloud, while laying the groundwork for a move towards the intelligent auditing of the future.

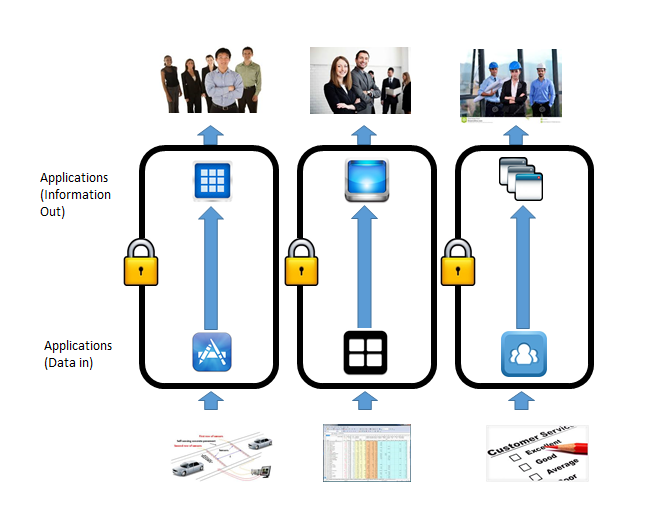

Most companies consist of a number of departments, each of which needs to use data from a different perspective. As a result of this segmentation, data can be duplicated, errors can proliferate, money can be wasted, and decisions are often made without access to the information embedded in the available data. Joined-up thinking is difficult without joined-up data to base it on.

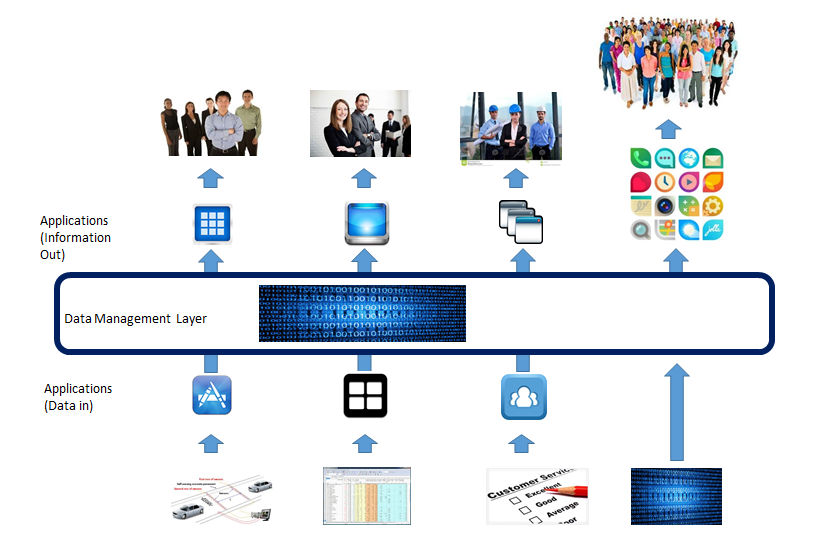

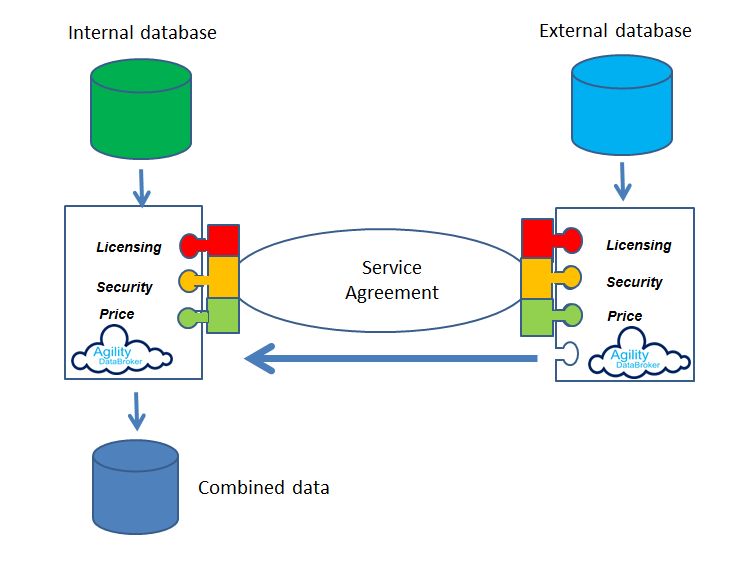

Agility DataBroker allows a company to specify what subset of data is required, where that data goes, and who may access it under what circumstances. It also allows that data to be combined with other data sources so that meaningful information can be extracted. Silos of information in different forms, focused on specific functions such as CRM, supply chain management and development data, can be collated through Agility DataBroker and made available to users based on service agreements that enforce access policies. Data becomes available in a usable form to those who need it, within the policies defined by the data owners. These policies can address all aspects of access, such as security, pricing and licensing, and can be maintained dynamically within the software. Agility DataBroker can initially be used to integrate as few as two silos of information, but can scale to enterprise-wide use.
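To make the idea of service agreements that enforce access policies more concrete, the following is a minimal Python sketch. It does not use the Agility DataBroker API; the `ServiceAgreement` and `Broker` classes, the role names and the sample record are all hypothetical, chosen only to illustrate how a data owner might restrict which fields a given role can see.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class ServiceAgreement:
    """Hypothetical agreement: which role may read which fields of a source."""
    role: str
    source: str
    allowed_fields: frozenset


@dataclass
class Broker:
    """Toy stand-in for a data broker; NOT the Agility DataBroker API."""
    sources: dict = field(default_factory=dict)     # source name -> list of records
    agreements: list = field(default_factory=list)  # may be changed at run time

    def request(self, role, source):
        """Return only the fields that an agreement grants to this role."""
        for agreement in self.agreements:
            if agreement.role == role and agreement.source == source:
                return [
                    {k: v for k, v in record.items() if k in agreement.allowed_fields}
                    for record in self.sources[source]
                ]
        raise PermissionError(f"no agreement lets {role!r} read {source!r}")


# Illustrative data: one CRM record, and an agreement that hides the email field.
broker = Broker(
    sources={"crm": [{"customer": "Acme", "email": "ops@acme.example", "balance": 1200}]},
    agreements=[ServiceAgreement("auditor", "crm", frozenset({"customer", "balance"}))],
)
auditor_view = broker.request("auditor", "crm")
```

Because the agreements live in an ordinary list in this sketch, adding or removing one at run time changes what later requests return, which loosely mirrors the idea of policies being maintained dynamically.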

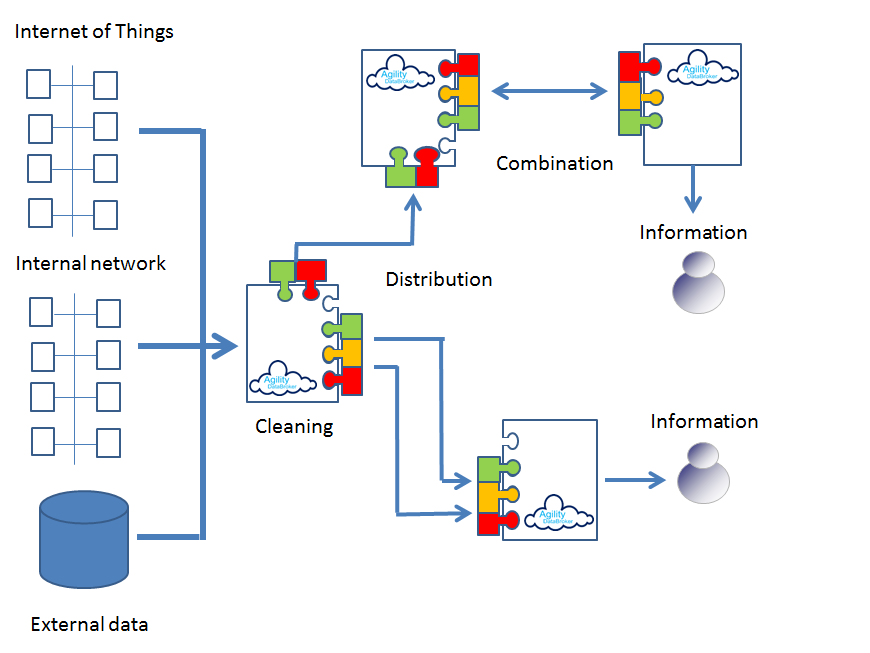

Many companies have inflexible legacy systems that impede data integration, as well as silos of information stored in the cloud. Agility DataBroker can be used to extract data from these heterogeneous sources, clean it, collate it, analyse it and distribute the resulting information to the users who need it, in the form that they require.
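The extract, clean and collate steps can be illustrated with a small Python sketch. This is an assumption-laden toy and not how Agility DataBroker is implemented: the `clean` and `collate` helpers, the field names and the two sample sources are hypothetical, and real sources would need far more careful reconciliation.

```python
def clean(record):
    """Normalise keys to lower case and strip whitespace from string values."""
    return {
        key.strip().lower(): value.strip() if isinstance(value, str) else value
        for key, value in record.items()
    }


def collate(sources, key):
    """Merge cleaned records from several sources on a shared key."""
    merged = {}
    for records in sources:
        for record in map(clean, records):
            # Records with the same key value are folded into one entry.
            merged.setdefault(record[key], {}).update(record)
    return list(merged.values())


# Hypothetical extracts: a legacy ledger and a cloud CRM with inconsistent formatting.
legacy_ledger = [{"Invoice ": "INV-1", "Amount": 250.0}]
cloud_crm = [{"invoice": " INV-1", "customer": "Acme "}]

combined = collate([legacy_ledger, cloud_crm], key="invoice")
```

Here the two inconsistently formatted records for the same invoice end up as a single combined record, which is the kind of result the collation step is meant to produce.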

Data can be cross-checked or combined with external data sources to better extract meaningful information. In a world where the amount of data is growing faster than it can be used, Agility DataBroker provides a mechanism to improve services, cut down on waste, and generate information that is meaningful and usable.

No matter where the data is stored, or in what format, Agility DataBroker can be used to extract the data, clean it, combine it with other data sources, analyse it and present it to auditors in accordance with strictly enforced but dynamically customisable policies that implement the service agreements dictated by legislation, governance and good practice.

Agility DataBroker is a framework that allows an organization to unlock the value of its data through controlled consolidation, analysis and distribution. It allows data to be extracted from any combination of data sources, whether they sit in the cloud or locally, under strictly enforced but dynamically changeable service agreements that govern its use and deployment.