In a world of changing fortunes for many financial institutions, turning data into usable information has become paramount. According to Cap Gemini, financial institutions are struggling to profit from ever-increasing volumes of data. Their research shows that organizational silos are the single biggest barrier to success with big data, while a dearth of analytics talent, the high cost of data management, and a lack of strategic focus on big data are also major stumbling blocks. Although most large financial institutions are relatively skilled in the manipulation of big data, we believe that Agility DataBroker, a software framework from Arjuna, can provide them with a mechanism to solve current data-related problems while establishing a foundation that will allow them to move towards the Smart Banking of the future.

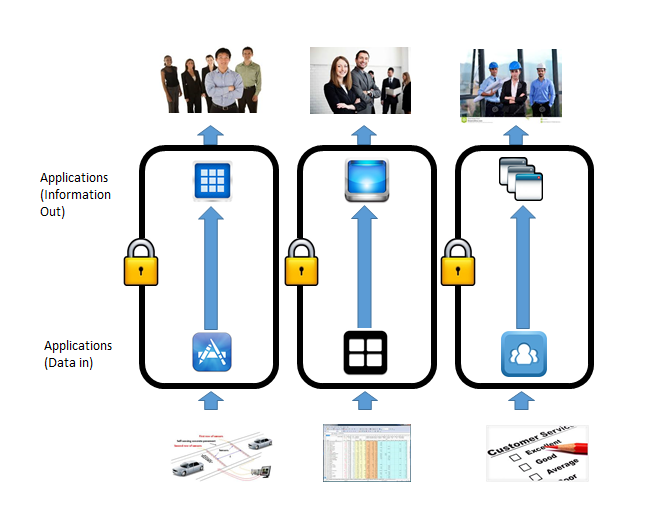

Most financial institutions consist of a number of departments, each of which needs to use data from a different perspective. As a result of this segmentation, data can be duplicated, errors can proliferate, money can be wasted, and decisions are often made without access to the information embedded in the available data. Joined-up thinking is difficult without joined-up data to base it on.

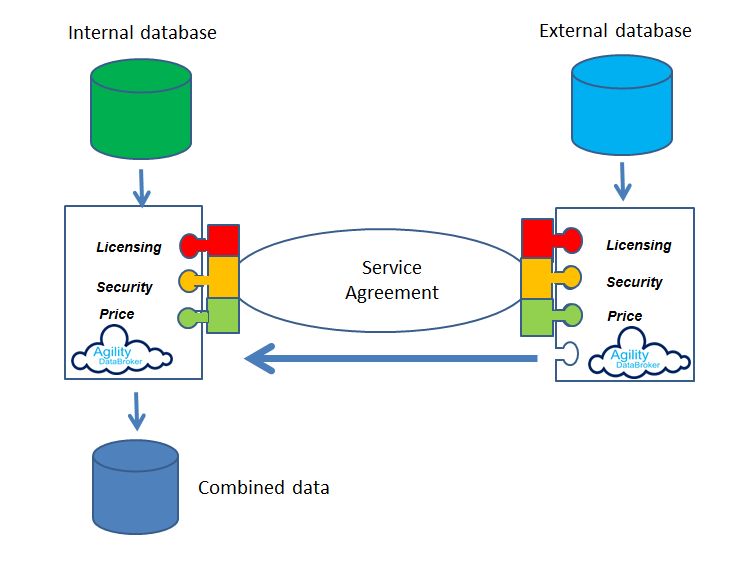

Agility DataBroker allows a financial institution to specify what subset of data is required, where that data goes, and who may access it under what circumstances, and to combine that data with other data sources so that meaningful information can be extracted. Silos of information in different forms, focused on specific functions such as CRM, portfolio management and loan servicing, can be collated through Agility DataBroker and made available to users based on service agreements which enforce access policies. Data becomes available in a usable form to those who need access to it, within the policies defined by the data owners. These policies can address all aspects of access (for example security, pricing and licensing) and can be maintained dynamically within the software.
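The idea of a service agreement that limits what a consumer may see can be illustrated with a minimal Python sketch. This is not the Agility DataBroker API: the `ServiceAgreement` class, the `release` function, and the sample department and field names are all hypothetical, chosen only to show a policy filtering a record before it leaves its silo.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ServiceAgreement:
    """Hypothetical agreement: which consumer may read which fields of which source."""
    consumer: str
    source: str
    allowed_fields: frozenset


def release(record, source, consumer, agreements):
    """Return only the fields the consumer's agreement permits, or refuse access."""
    for agreement in agreements:
        if agreement.consumer == consumer and agreement.source == source:
            return {k: v for k, v in record.items() if k in agreement.allowed_fields}
    raise PermissionError(f"{consumer} has no agreement covering {source}")


# Illustrative data: the data owner lets marketing see two CRM fields only.
agreements = [
    ServiceAgreement("marketing", "crm", frozenset({"customer_id", "segment"})),
]
crm_record = {"customer_id": 17, "segment": "retail", "balance": 1200.0}
released = release(crm_record, "crm", "marketing", agreements)
```

Because the agreements are ordinary data in this sketch, the data owner can add, tighten or withdraw one at run time without changing the code that enforces them, which mirrors the "maintained dynamically" property described above.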

Agility DataBroker can initially be used to integrate as few as two silos of information, and can then scale to an enterprise-wide application.

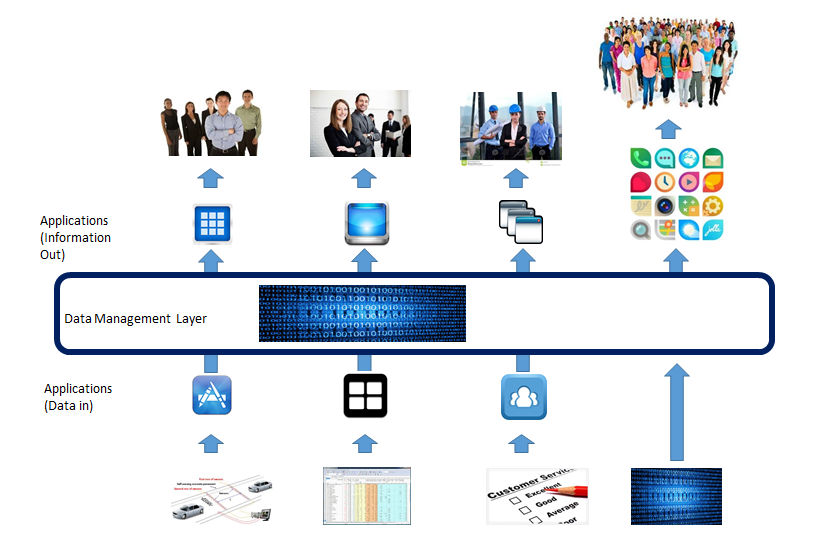

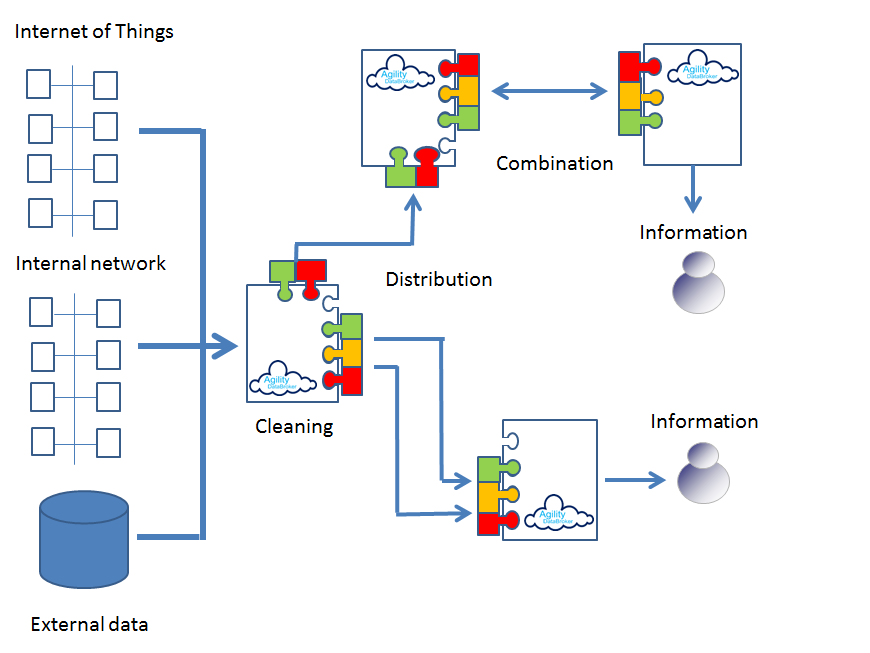

Many banks have inflexible legacy systems that impede data integration and prevent them from generating a single, seamless 360-degree view of the customer. Agility DataBroker can be used to extract data from heterogeneous data sources, clean it, collate it, analyse it and distribute the resulting meaningful information to the users who need it, in the form that they require. Cap Gemini estimate that banks applying analytics to customer data have a four-percentage-point lead in market share over banks that do not. For banks that use analytics to understand customer attrition, the difference is even more stark, at twelve percentage points.

Data can be cross-checked or combined with external data sources to better extract meaningful information. For example, Agility DataBroker can be used to improve trader oversight by combining data sources that map out trader exposure. In a world where the amount of data is growing faster than it can be used, Agility DataBroker provides a mechanism to improve services, cut down on waste, and generate information that is meaningful and usable.

Agility DataBroker can help solve the major problems associated with data management in large financial institutions as determined by the Cap Gemini report:

No matter where the data is stored, or in what format, Agility DataBroker can be used to extract the data, clean it, combine it with other data sources, analyse it and present it to users in accordance with strictly enforced but dynamically customisable policies that implement the service agreements dictated by legislation, governance and good practice.
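As a rough illustration of the extract, clean and combine steps, the following Python sketch merges rows from two silos that share a customer key. It is a simplified sketch under stated assumptions, not Agility DataBroker code: the functions `clean` and `collate`, the `customer_id` key, and the sample rows are all hypothetical.

```python
def clean(record):
    """Normalise field names and strip stray whitespace from string values."""
    return {
        key.strip().lower(): value.strip() if isinstance(value, str) else value
        for key, value in record.items()
    }


def collate(crm_rows, loan_rows, key="customer_id"):
    """Merge cleaned rows from two silos into one view per customer."""
    merged = {}
    for row in map(clean, crm_rows):
        merged.setdefault(row[key], {}).update(row)
    for row in map(clean, loan_rows):
        merged.setdefault(row[key], {}).update(row)
    return merged


# Illustrative rows: the two silos spell the same field names differently.
crm_rows = [{"Customer_ID": 17, " Name ": " Ada Smith "}]
loan_rows = [{"customer_id": 17, "loan_balance": 5400.0}]
customer_view = collate(crm_rows, loan_rows)
```

In practice the combined view would then pass through the access policies before being presented to any user, so that collation never widens what a given consumer is entitled to see.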

Agility DataBroker is a framework that allows an organization to unlock the value of data through its controlled consolidation, analysis and distribution. Agility DataBroker allows for data to be extracted from any combination of data sources, whether they sit in the Cloud or locally, under strictly enforced, but dynamically changeable service agreements which govern its use and deployment.