The typical mid- to large-scale organization faces pressure from three directions: legislative compliance, security concerns, and the search for best practice in managing and monitoring the use of company data. Legislation such as Sarbanes-Oxley or the European Data Directives puts the onus on publicly traded organizations to protect the integrity of the data that feeds their financial reporting. Compliance is difficult if access to data is unrestricted, or if silos of data are not integrated within the organization. Technologies such as Dropbox allow individuals and departments to bypass the IT department and distribute data in the cloud, which leaves the enterprise without a cohesive data management strategy. At the same time, the need to turn data into usable information has increased in priority. The challenge for the Compliance Officer is to implement controls over data proliferation without reducing service to the user departments.

In particular, big data poses a significant challenge for regulatory compliance. The compliance stakes could be critical for industries such as finance and health care that are subject to tight regulatory standards. The challenge lies not just in the sheer volume of big data, but in its complexity and lack of consistent structure. The key to ensuring compliance is to track down and isolate the compliance-sensitive portions of that data, e.g. those that compromise anonymity. As one compliance and security expert, Jon Heimerl, said, "big data stores are leading organizations to not worry enough about very specific pieces of information."
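As a rough illustration of isolating the compliance-sensitive portion of a record, the sketch below splits a record into sensitive and general fields. The field names and the `split_sensitive` helper are assumptions made for this example only; they are not part of any product or regulation.

```python
from typing import Any

# Hypothetical set of field names treated as compliance-sensitive.
# A real deployment would derive this from its own data classification.
SENSITIVE_FIELDS = {"name", "email", "date_of_birth", "national_id"}


def split_sensitive(record: dict[str, Any]) -> tuple[dict[str, Any], dict[str, Any]]:
    """Return (sensitive, general) parts of a record, split by field name."""
    sensitive = {k: v for k, v in record.items() if k in SENSITIVE_FIELDS}
    general = {k: v for k, v in record.items() if k not in SENSITIVE_FIELDS}
    return sensitive, general


record = {"name": "A. Example", "email": "a@example.com", "region": "North", "spend": 120.5}
sensitive, general = split_sensitive(record)
```

Once separated, the sensitive part can be held under tighter controls while the general part remains available for analysis.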

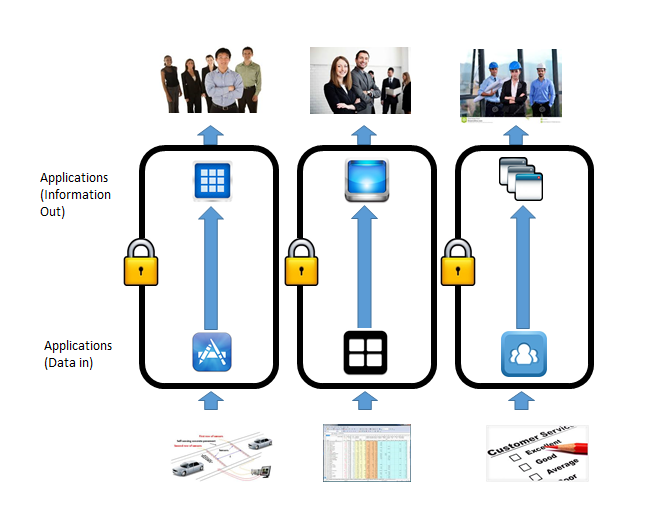

Most companies consist of a number of departments, each of which needs to use data from a different perspective. As a result of this segmentation, data can be duplicated, errors can proliferate, money can be wasted, and decisions are often made without access to the information embedded in the available data. Joined-up thinking is difficult without joined-up data to base it on.

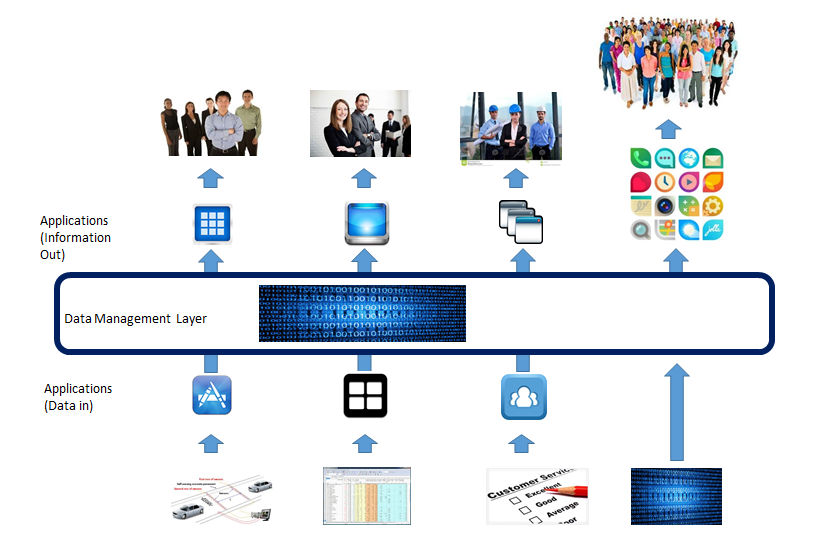

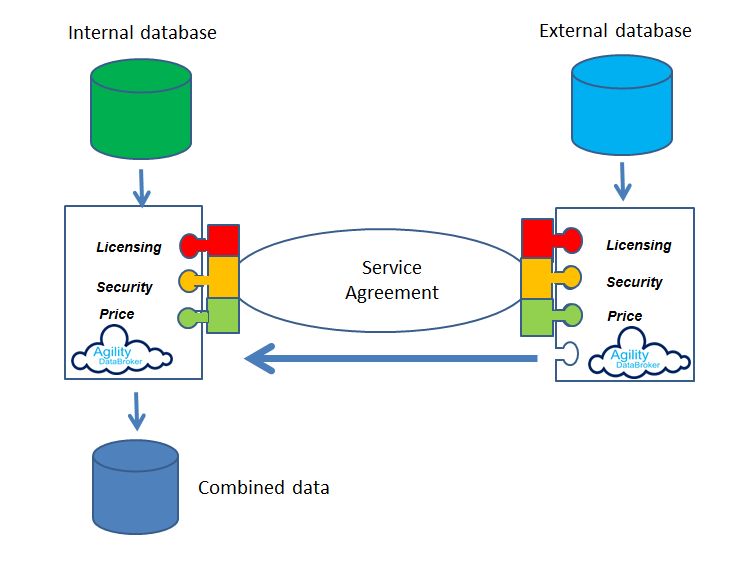

Agility DataBroker allows a company to specify what subset of data is required, where that data goes, and who may access it under what circumstances, and to combine that data with other data sources so that meaningful information can be extracted. Silos of information in different forms, each focused on a specific function, can be collated through Agility DataBroker and made available to users based on service agreements that enforce access policies. Data becomes available in a usable form to those who need it, within the policies defined by the data owners. These policies can address all aspects of access and can be maintained dynamically within the software. Agility DataBroker can initially be used to integrate as few as two silos of information, and can scale to enterprise-wide use.
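To make the idea of a service agreement that enforces an access policy more concrete, here is a minimal sketch. It does not reflect Agility DataBroker's actual interface, which is not described here; the `ServiceAgreement` class, the `fetch` function and all field names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ServiceAgreement:
    """Hypothetical agreement: which roles may see which fields of a dataset."""
    dataset: str
    allowed_roles: frozenset
    allowed_fields: frozenset


def fetch(agreements, dataset, role, rows):
    """Return rows restricted to the permitted fields, or refuse access."""
    for agreement in agreements:
        if agreement.dataset == dataset and role in agreement.allowed_roles:
            return [
                {k: v for k, v in row.items() if k in agreement.allowed_fields}
                for row in rows
            ]
    raise PermissionError(f"role {role!r} has no agreement covering {dataset!r}")


agreements = [
    ServiceAgreement("payroll", frozenset({"finance"}), frozenset({"employee_id", "salary"})),
]
rows = [{"employee_id": 7, "salary": 42000, "home_address": "1 High St"}]

visible = fetch(agreements, "payroll", "finance", rows)
```

Because the agreements are plain data, the data owner can add, remove or change one at run time without altering the code that serves requests, which is the sense in which such policies can be maintained dynamically.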

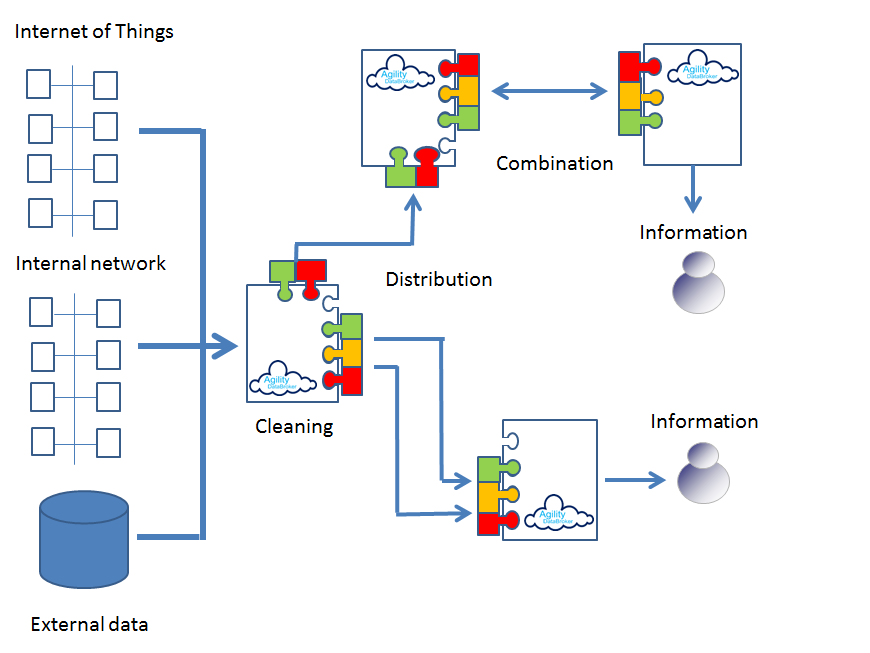

Many companies have inflexible legacy systems that impede data integration, as well as silos of information stored in the cloud. Agility DataBroker can be used to extract data from these heterogeneous data sources, clean it, collate it, analyse it and distribute the resulting meaningful information to the users who need it, in the form that they require.
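The extract, clean, collate and analyse steps can be sketched in a few lines. This is an illustrative outline under stated assumptions, not the product's implementation: the two sources (a legacy CSV export and a list of cloud records), their column names and every function name are invented for the example.

```python
import csv
import io
from collections import defaultdict

# Hypothetical sources: a legacy CSV export and records pulled from a cloud store.
LEGACY_CSV = "customer_id,amount\n C001 ,100.0\nc002,50.5\n"
CLOUD_ROWS = [
    {"customer_id": "C001", "amount": "25"},
    {"customer_id": "C003", "amount": "10"},
]


def extract_csv(text):
    """Extract: parse CSV text into a list of dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def clean(row):
    """Clean: normalise the customer key and convert the amount to a number."""
    return {
        "customer_id": row["customer_id"].strip().upper(),
        "amount": float(row["amount"]),
    }


def collate(*sources):
    """Collate: merge cleaned rows from every source into one list."""
    return [clean(row) for source in sources for row in source]


def analyse(rows):
    """Analyse: total the amount per customer across all sources."""
    totals = defaultdict(float)
    for row in rows:
        totals[row["customer_id"]] += row["amount"]
    return dict(totals)


totals = analyse(collate(extract_csv(LEGACY_CSV), CLOUD_ROWS))
```

Distribution would then hand `totals`, or a filtered view of it, to each user in the form they require.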

Data can be cross-checked against or combined with external data sources to extract more meaningful information. In a world where the amount of data is growing faster than it can be used, Agility DataBroker provides a mechanism to improve services, cut down on waste, and generate information that is meaningful and usable. Applications such as Dropbox can be integrated into the environment so that user departments do not bypass the compliance strategy, while retaining the flexibility of using the cloud where desirable.

No matter where the data is stored, or in what format, Agility DataBroker can be used to extract the data, clean it, combine it with other data sources, analyse it and present it to users in accordance with strictly enforced but dynamically customisable policies that implement the service agreements dictated by legislation, governance and good practice.

Agility DataBroker is a framework that allows an organization to unlock the value of data through its controlled consolidation, analysis and distribution. It allows data to be extracted from any combination of data sources, whether they sit in the cloud or locally, under strictly enforced but dynamically changeable service agreements that govern its use and deployment.